Force Input Device for Graphical Environment Tweaking (FIDGET)

From The Theme

SENSING AND COMPUTING

WHAT IF

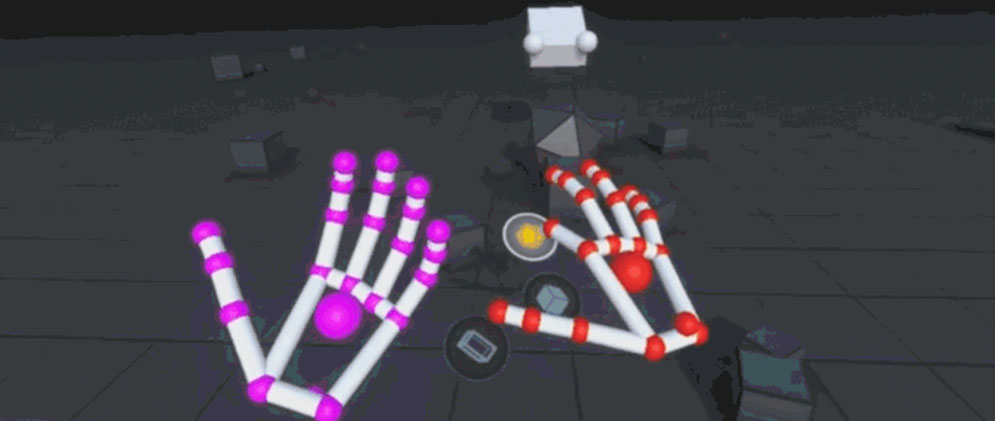

What if we could provide natural interactions with 3D environments?

WHAT WE SET OUT TO DO

We set out to develop a handheld physical input device that would let designers use their full dexterity (the whole hand, both hands, perhaps even the whole body) when interacting with graphical computing systems. The proposed two-handed Force Input Device for Graphical Environment Tweaking (“FIDGET”) would sense manipulations such as bending, pulling, and twisting that today's mice and keyboards cannot, expanding what designers can create by expanding the capabilities of their tools. The goal of this project was to build several working prototypes that explore form factors, sensing technologies, and interaction techniques for the FIDGET concept.

WHAT WE FOUND

We developed a rough framework for understanding possible modes of physical interaction that users might have with a handheld device, and mapped it to different functions common to graphic manipulation, particularly Computer Aided Design (CAD).

We built eleven FIDGET prototypes, each based roughly on the shape of a pen, and each capable of capturing some aspect of dexterous manual input. Each FIDGET is based on a common FIDGETboard architecture, which includes a microprocessor to capture sensor data, our self-designed communication protocol, and graphical prototyping software on a host PC.
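
As a rough illustration of how a microprocessor might pass sensor readings to host software over a serial link, the sketch below frames a single reading and decodes it on the host side. The project's actual protocol is not described here, so everything in this example is an assumption made for illustration: the 5-byte frame layout, the `START` marker, the additive checksum, and the names `encode_frame` and `decode_frame` are all hypothetical.

```python
import struct
from typing import Tuple

START = 0xAA  # hypothetical start-of-frame marker, not from the FIDGET protocol


def encode_frame(sensor_id: int, reading: int) -> bytes:
    """Pack one sensor reading into an assumed 5-byte frame.

    Layout (illustrative only): START, sensor id (1 byte),
    reading (2 bytes, big-endian), checksum (1 byte).
    """
    payload = struct.pack(">BH", sensor_id, reading)
    checksum = sum(payload) & 0xFF  # simple additive checksum over the payload
    return bytes([START]) + payload + bytes([checksum])


def decode_frame(frame: bytes) -> Tuple[int, int]:
    """Validate a frame on the host side and return (sensor_id, reading)."""
    if len(frame) != 5 or frame[0] != START:
        raise ValueError("malformed frame")
    payload, checksum = frame[1:4], frame[4]
    if sum(payload) & 0xFF != checksum:
        raise ValueError("checksum mismatch")
    sensor_id, reading = struct.unpack(">BH", payload)
    return sensor_id, reading


if __name__ == "__main__":
    # Round-trip a made-up reading from a made-up sensor channel.
    frame = encode_frame(sensor_id=3, reading=512)
    print(decode_frame(frame))
```

A fixed-length frame with a start marker and checksum is one common way to let a host resynchronize after dropped bytes. A real device could just as well use a text-based or variable-length format.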

Each prototype explores how a particular sensor can be applied to a particular mode of physical input through the FIDGET device. The wide range of prototypes built demonstrates the flexibility of the embedded system architecture, communication protocol, and graphical environment we selected. Our initial prototype work helps to show how different sensors can be used; future work can build on these insights to develop more integrated devices with a larger range of possible inputs. Further areas to explore with FIDGET devices include haptic feedback, multiple serial channels for interacting devices, and wireless communication.

LEARN MORE

Interaction Devices for Hands-On Desktop Design. (2003). Ju, W., Madsen, S., Fiene, J., Bolas, M., McDowall, I., Faste, R. Proc. SPIE 5006, Stereoscopic Displays and Virtual Reality Systems X.

PEOPLE BEHIND THE PROJECT

Larry Leifer is Professor of Mechanical Engineering and Founding Director for the Center for Design Research at Stanford University. Dr. Leifer’s engineering design thinking research is focused on instrumenting design teams to understand, support, and improve design practice and theory. Specific issues include: design-team research methodology, global team dynamics, innovation leadership, interaction design, design-for-wellbeing, and adaptive mechatronic systems.

Mark Bolas is a researcher exploring perception, agency and intelligence. His work focuses on creating virtual environments and transducers that fully engage one’s perception and cognition and create a visceral memory of the experience. At the time of this proposal, he was a Lecturer in Stanford’s Product Design program, and co-founder of Fakespace Labs in Mountain View, California, building instrumentation for research labs to explore virtual reality. As of 2017, he is the Associate Director for mixed reality research and development at the Institute for Creative Technologies at USC, and an Associate Professor of Interactive Media in the Interactive Media Division of the USC School of Cinematic Arts.

David Kelley is the founder and chairman of the global design and innovation company IDEO. Kelley also founded Stanford University’s Hasso Plattner Institute of Design, known as the d.school. As Stanford’s Donald W. Whittier Professor in Mechanical Engineering, Kelley is the Academic Director of both the undergraduate and graduate degree-granting programs in Design within the School of Engineering, and has taught classes in the program for more than 35 years.

Ian McDowall is CEO and co-founder of Fakespace Labs in Mountain View, California, building instrumentation for research labs to explore virtual reality. He is also a Fellow at Intuitive Surgical, working on intelligent robotic technologies.
