Detection of Comprehension & Emotion from Real-time Video Capture of Facial Expressions

From The Theme

HUMAN MACHINE INTERACTION AND SENSING

WHAT IF?

What if we could automatically detect how well someone is comprehending information presented on a computer screen, based on the emotions they display?

WHAT WE SET OUT TO DO

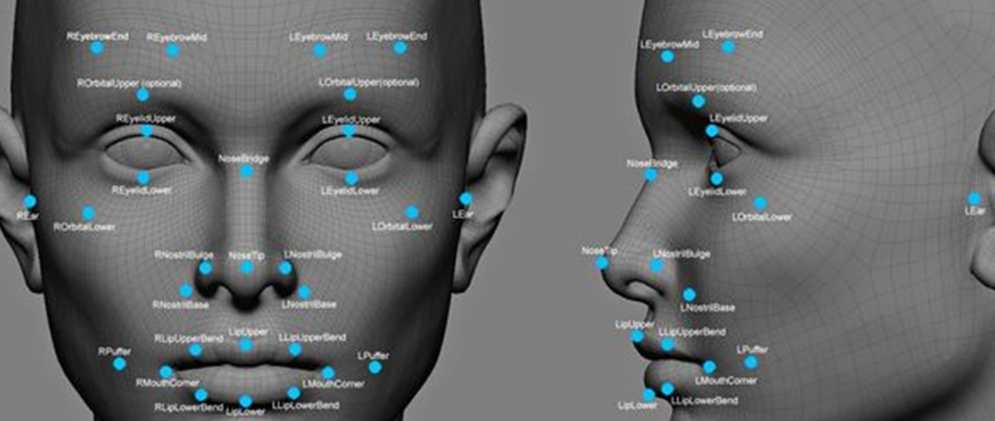

We set out to assess the effects of emotion on comprehension, using educational systems already in place at Stanford’s Virtual Human Interaction Laboratory. The four-part strategy was: 1) tracking a learner’s face during a comprehension task; 2) capturing facial expressions and categorizing the learner’s emotions; 3) investigating how emotions affect comprehension; and finally, 4) measuring the effects of changing the learning system in real time, based on learner emotions.

WHAT WE FOUND

We used machine learning algorithms to develop automated, real-time models that analyzed subjects’ emotional states based on facial expressions and physiological measurements. We built two types of models: models specific to each subject, and general models that apply across subjects. Our approach predicted emotion type (amusement versus sadness) and intensity level, and its predictions were compared directly against the assessments of trained coders.
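The difference between person-specific and general models comes down to which data a model is trained and tested on. The sketch below illustrates that distinction under loudly stated assumptions: it uses a nearest-centroid classifier as a deliberately simple stand-in (not the algorithms used in the project), covers only the emotion category (not intensity), and the helper names (`fit_centroids`, `person_specific_accuracy`, `general_model_accuracy`) and the `TOY` data are hypothetical, invented for illustration rather than taken from the study. A general model is scored by leaving one subject out entirely; a person-specific model is trained and tested on separate frames from the same subject.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# One sample: (subject_id, feature_vector, emotion_label).
# Assumption: features are per-frame facial-tracking values, optionally
# concatenated with physiological measures.
Sample = Tuple[str, List[float], str]


def fit_centroids(samples: Sequence[Sample]) -> Dict[str, List[float]]:
    """Nearest-centroid 'training': the mean feature vector for each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for _, x, y in samples:
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids: Dict[str, List[float]], x: List[float]) -> str:
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(centroids[y], x)))


def accuracy(centroids: Dict[str, List[float]], samples: Sequence[Sample]) -> float:
    hits = sum(predict(centroids, x) == y for _, x, y in samples)
    return hits / len(samples)


def general_model_accuracy(samples: Sequence[Sample]) -> float:
    """Leave-one-subject-out: train on all other subjects, test on the held-out one."""
    subjects = sorted({s for s, _, _ in samples})
    scores = []
    for held_out in subjects:
        train = [d for d in samples if d[0] != held_out]
        test = [d for d in samples if d[0] == held_out]
        scores.append(accuracy(fit_centroids(train), test))
    return sum(scores) / len(scores)


def person_specific_accuracy(samples: Sequence[Sample]) -> float:
    """Per subject: alternate each label's frames between training and testing.

    Adjacent video frames are correlated, so this interleaved split is
    optimistic; holding out contiguous blocks would be a stricter test.
    """
    groups: Dict[Tuple[str, str], List[Sample]] = defaultdict(list)
    for d in samples:
        groups[(d[0], d[2])].append(d)
    train_by_subject: Dict[str, List[Sample]] = defaultdict(list)
    test_by_subject: Dict[str, List[Sample]] = defaultdict(list)
    for (subject, _), rows in groups.items():
        train_by_subject[subject].extend(rows[0::2])
        test_by_subject[subject].extend(rows[1::2])
    scores = [accuracy(fit_centroids(train_by_subject[s]), test_by_subject[s])
              for s in train_by_subject if test_by_subject[s]]
    return sum(scores) / len(scores) if scores else 0.0


# Invented toy data: subject s2 has a higher feature baseline than s1.
TOY: List[Sample] = [
    ("s1", [1.0], "amusement"), ("s1", [1.2], "amusement"),
    ("s1", [0.0], "sadness"), ("s1", [0.2], "sadness"),
    ("s2", [3.0], "amusement"), ("s2", [3.2], "amusement"),
    ("s2", [2.0], "sadness"), ("s2", [2.2], "sadness"),
]

if __name__ == "__main__":
    print("person-specific:", person_specific_accuracy(TOY))
    print("general:", general_model_accuracy(TOY))
```

On this invented data the person-specific score is 1.0 and the general score is 0.5, because a model trained on one subject's baseline misreads the other's. This only illustrates one way per-person calibration can help; it is not a claim about why the project's person-specific models performed better.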

Results demonstrated good fits for the models overall, with better performance for emotion categories than for emotion intensity. Amusement ratings were more accurate than sadness ratings. The full model using physiological measures as well as facial tracking performed better than facial tracking alone, and our person-specific models performed better than general models.

LEARN MORE

Kizilcec, R.F., Bailenson, J.N., & Gomez, C.J. (2015). “The Instructor’s Face in Video Instruction: Evidence from Two Large-Scale Field Studies.” Journal of Educational Psychology, 107(3), 724-739.

Jabon, M.E., Ahn, S.J., & Bailenson, J.N. (2011). “Automatically Analyzing Facial-Feature Movements to Identify Human Errors.” IEEE Intelligent Systems, 26(2), 54-63.

Jabon, M.E., Bailenson, J.N., Pontikakis, E.D., Takayama, L., & Nass, C. (2011). “Facial Expression Analysis for Predicting Unsafe Driving Behavior.” IEEE Pervasive Computing, 10(4), 84-95.

Ahn, S.J., Bailenson, J.N., Fox, J., & Jabon, M.E. (2010). “Using Automated Facial Expression Analysis for Emotion and Behavior Prediction.” In Doeveling, K., von Scheve, C., & Konijn, E. A. (Eds.), Handbook of Emotions and Mass Media (pp. 349-369). London/New York: Routledge.

Bailenson, J.N., Yee, N., Blascovich, J., Beall, A.C., Lundblad, N., & Jin, M. (2008). “The Use of Immersive Virtual Reality in the Learning Sciences: Digital Transformations of Teachers, Students, and Social Context.” The Journal of the Learning Sciences, 17, 102-141.

Bailenson, J.N., Pontikakis, E.D., Mauss, I.B., Gross, J.J., Jabon, M.E., Hutcherson, C.A., Nass, C., & John, O. (2008). “Real-Time Classification of Evoked Emotions Using Facial Feature Tracking and Physiological Responses.” International Journal of Human-Computer Studies, 66, 303-317.

Bailenson, J.N., Yee, N., Blascovich, J., & Guadagno, R.E. (2008). “Transformed Social Interaction in Mediated Interpersonal Communication.” In Konijn, E., Tanis, M., Utz, S., & Linden, A. (Eds.), Mediated Interpersonal Communication (pp. 77-99). Lawrence Erlbaum Associates.

PEOPLE BEHIND THE PROJECT

Jeremy Bailenson is founding director of Stanford University’s Virtual Human Interaction Lab, the Thomas More Storke Professor in the Department of Communication at Stanford, and a Senior Fellow at the Woods Institute for the Environment. He designs and studies virtual reality systems that allow physically remote individuals to meet in virtual space, and explores the manner in which these systems change the nature of verbal and nonverbal interaction. In particular, he explores how virtual reality can change the way people think about education, environmental.