mediaX Thought Leaders Looking to Personalize VR Based on Eyesight

Some new technologies can be tuned to our personal characteristics, like the voice recognition on smartphones trained to recognize how we speak. But that isn’t possible with today’s virtual reality headsets. They can’t account for differences in vision, which can make watching VR less enjoyable or even cause headaches or nausea.

Researchers at Stanford’s Computational Imaging Lab, working with a Dartmouth College scientist, are developing VR headsets that can adapt how they display images to account for factors like eyesight and age that affect how we actually see. “Every person needs a different optical mode to get the best possible experience in VR,” said Gordon Wetzstein, assistant professor of electrical engineering.

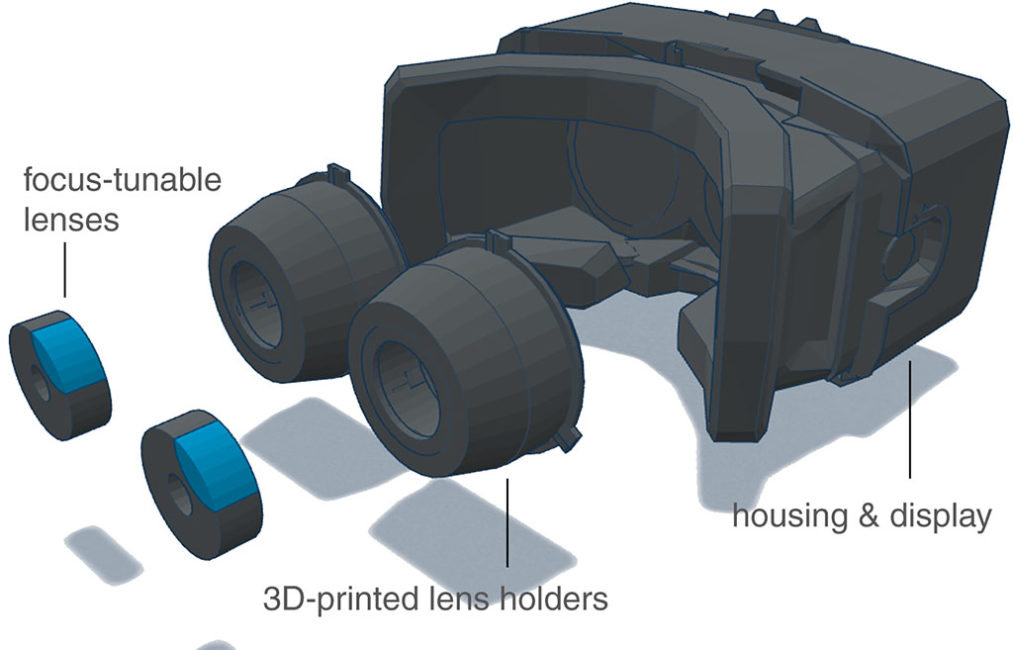

The researchers are testing hardware and software fixes designed to change the focal plane of a VR display. They call this technology an adaptive focus display.

“Over a 30- to 40-minute period, your eyes may start hurting, you might have a headache,” said Nitish Padmanaban, a PhD student in electrical engineering at Stanford and member of the research team. “You might not know exactly why something is wrong but you’ll feel it. We think that’s going to be a negative thing for people as they start to have longer and better VR content.”

Read the entire Stanford Report story by Vignesh Ramachandran.